Forensic Collection from Proxmox VE

The following are some notes and a bit of a guide regarding collecting memory and disk from Proxmox Virtual Environment (hereafter PVE). There doesn't seem to be nearly as much information regarding best practices and potential pitfalls as there is for Hyper-V or ESXi. However, with the growing popularity of PVE, I can see forensic collections from this hypervisor becoming more of a priority.

I've tested the following (except where otherwise noted) in my home lab and performed some minimal verification. For example, not only does it appear to dump memory, but the resulting file can actually be read by Volatility 3. I have not done extensive testing to make sure every expected artifact is there and every module runs.

If you find something that isn't correct, is different in a production environment, or can be done a better way, please leave a note somewhere (here, the Proxmox forums, Reddit, etc.) where the community can find it with a search. In other words, I don't care if you contribute to my work, but please contribute to the community.

For those not familiar with PVE, the VM ID numbers, usually starting at 100, are the equivalent of the GUID/UUID in many other systems. Each one is a unique numerical identifier for a system, and a lot of functions/files use it to identify the host in question. So when you see 100, 101, etc. listed below, that is just a reference to the system with ID number 100, or system 101, etc.

Memory

- Memory can be extracted from both running and paused systems on the command line using the following steps:

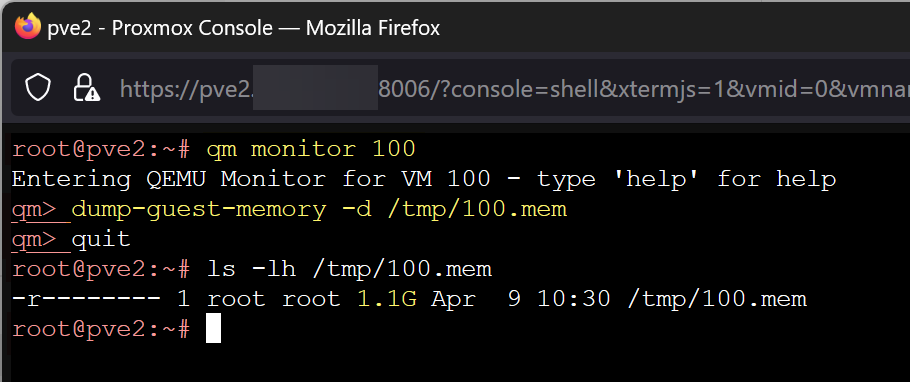

  - "qm monitor [VMID]", e.g. "qm monitor 101"

  - "dump-guest-memory -d /tmp/[dumpname].mem", e.g. "dump-guest-memory -d /tmp/101-running.mem"

  - "help dump-guest-memory" to get options, "quit" to exit


- Running and paused systems seem to produce output files of the same size, and both seem to contain network connections. I looked for these specifically because I know they can be broken in ESXi if the system is suspended. I don't see a significant difference in the output.

- There is also the hibernate option. This results in a vm-[VMID]-state-suspend-[DATE].raw file being written in /dev/pve/. The file is a QCOW suspended disk state file (QEVM header) that contains the volatile memory as well as other data, but it cannot be processed by Volatility as is (I'm not sure about other tools).

  - The memory can be extracted with vol3's LayerWriter plugin.

  - Get a list of layers with "vol -f vm-101-state-suspend-2024-04-08.raw layerwriter.LayerWriter --list"

  - Extract the memory layer with "vol -f vm-101-state-suspend-2024-04-08.raw layerwriter.LayerWriter --layers memory_layer"

  - This resulted in a "memory_layer.raw" file that was approximately 2 GB larger than the allocated memory (6 GB vs 4.2 GB with dump-guest-memory; the VM had 4 GB assigned).

  - Other than the size, the file did seem to have the same network connections and was readable by Volatility 3.

  - Given the larger file size and the need to extract the memory layer, I don't see an immediate benefit to using hibernate.

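The size comparison above (dump size vs. memory assigned to the VM) can be scripted. The following is a minimal sketch, not a tested procedure: the function names are made up for illustration, and it assumes that "qm config [VMID]" prints a plain "memory: <MiB>" line, which may not hold for every PVE version or configuration.

```shell
# Hypothetical helpers for comparing a memory dump's size with the VM's assigned memory.

# Read `qm config <VMID>` output on stdin and print the configured memory in MiB.
# Assumption: the value appears as a plain "memory: 4096" style line.
configured_mem_mib() {
    awk -F': *' '$1 == "memory" { print $2; exit }'
}

# Print how many MiB larger (negative if smaller) a dump is than the assigned memory.
#   $1 = dump size in bytes, $2 = assigned memory in MiB
dump_overhead_mib() {
    dump_bytes=$1
    mem_mib=$2
    echo $(( dump_bytes / 1024 / 1024 - mem_mib ))
}

# Example use on the PVE host (paths and VM ID taken from the notes above):
#   mem=$(qm config 101 | configured_mem_mib)
#   size=$(stat -c %s /tmp/101-running.mem)
#   dump_overhead_mib "$size" "$mem"
```

A large positive number, like the roughly 2 GB seen with the hibernate state file, is a hint that the file holds more than just guest RAM.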

Disk Images

- There's no way to export these to your local system (e.g. right click -> download disk) via the web interface that I am aware of.

- Disks are probably (depending on setup and configuration) stored as block devices, not actual files on the file system (reference: https://forum.proxmox.com/threads/vm-disk-location-cant-find-the-vm-disk.101118/#post-436322).

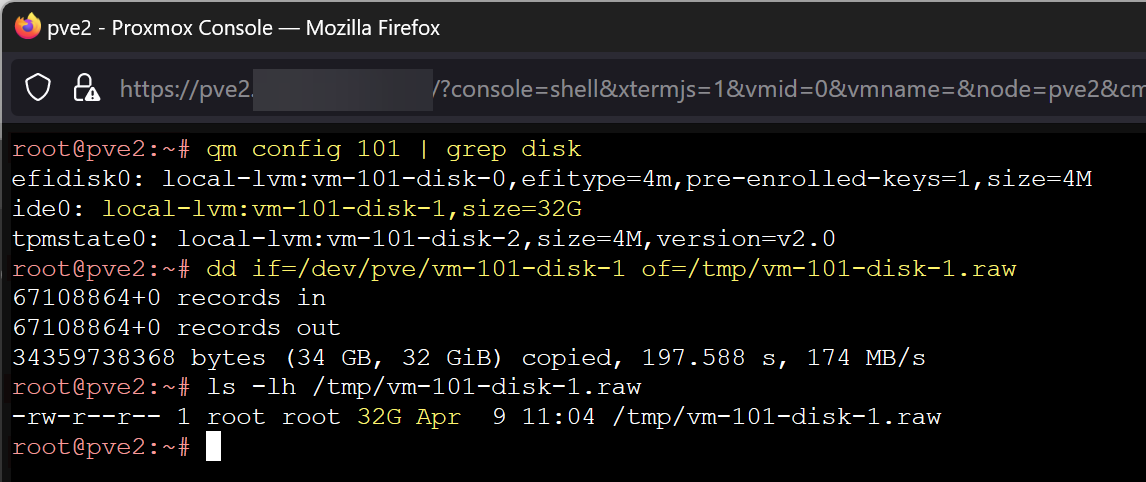

- However, block device disks can be written to a file. Find the primary disk name with "qm config [VMID]", e.g. "qm config 101 | grep disk"

  - Disks for local/LVM storage should be in /dev/pve/. They may also be in /mnt/pve/ in enterprise environments with shared storage. Once you find the disk name, the find command could be used to locate the disk.


- Copy the block device to a physical file, e.g. "dd if=/dev/pve/vm-101-disk-1 of=/tmp/vm-101-disk-1.raw"

  - This appears to work just fine while the VM is still running. I'm not sure if there would be any benefits or drawbacks to pausing or hibernating the VM first.

- The disk may also be stored as a standard file, again depending on setup and config. When using a NAS as my storage point, there is a .qcow2 file (or file type of choice; VMDK is also an option) that can be easily obtained by browsing to that directory. More on the available storage types here: https://pve.proxmox.com/pve-docs/pve-admin-guide.html#_storage_types

- Alternatively (I haven't tested this yet), you should be able to go to Hardware -> Hard Disk -> Disk Actions -> Move Storage and move a block disk to storage that is accessible and uses a format that results in a typical file (e.g. qcow2/vmdk on a NAS).
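The "find the disk name, then dd it" steps above can be sketched as two small shell functions. This is an illustration under assumptions, not a verified procedure: the function names are hypothetical, the parsing assumes "qm config" disk lines of the form "scsi0: local-lvm:vm-101-disk-0,size=32G", and the SHA-256 step is my addition so the image can be checked after it is moved.

```shell
# Hypothetical helpers; names and the assumed line format are not from PVE documentation.

# Read `qm config <VMID>` output on stdin and print one volume name per disk line,
# e.g. "scsi0: local-lvm:vm-101-disk-0,size=32G" -> "vm-101-disk-0".
# CD-ROM entries are skipped; EFI/TPM volumes are not matched by this pattern.
list_disk_volumes() {
    grep -E '^(ide|sata|scsi|virtio)[0-9]+:' \
        | grep -v 'media=cdrom' \
        | sed -E 's/^[a-z]+[0-9]+: *[^:]+:([^,]+).*/\1/'
}

# Copy a block device (or any readable file) to an image file, then print the
# SHA-256 of the image. Note that on a running VM the source keeps changing,
# so only the image hash is recorded, not a hash of the live device.
image_disk() {
    src=$1
    dst=$2
    dd if="$src" of="$dst" bs=4M status=none && sha256sum "$dst" | cut -d' ' -f1
}

# Example use on the PVE host (local/LVM storage assumed to live under /dev/pve/):
#   qm config 101 | list_disk_volumes
#   image_disk /dev/pve/vm-101-disk-1 /tmp/vm-101-disk-1.raw
```

For file-based storage the first function prints something like "101/vm-101-disk-0.qcow2" instead, which is a path relative to the storage rather than a device name under /dev/pve/.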

Given what I've found so far, I'd probably:

- grab memory while the system is still running. I'm less worried about memory smearing than I am about losing something when pausing that I don't see because I'm not familiar enough with PVE.

- dd the disk image (running seems to work fine, not sure it matters here)

- zip both up and move them off the host with SCP
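The last step in the list above could look something like the sketch below. It is only one way to do it: gzip stands in for "zip" here as an assumption, the function name and the manifest file name are made up, and the SCP destination in the comment is a placeholder.

```shell
# Hypothetical helper: compress collected files and record their hashes.
#   $1 = directory holding the collected files, remaining args = file names in it
# Writes <name>.gz next to each file (originals are kept) and appends the
# SHA-256 of each .gz to manifest.sha256 in the same directory.
package_evidence() {
    dir=$1
    shift
    (
        cd "$dir" || exit 1
        for f in "$@"; do
            gzip -k "$f" || exit 1
            sha256sum "$f.gz" >> manifest.sha256 || exit 1
        done
    )
}

# Example use on the PVE host (user, host and path are placeholders):
#   package_evidence /tmp 101-running.mem vm-101-disk-1.raw
#   scp /tmp/*.gz /tmp/manifest.sha256 analyst@workstation:/cases/pve-101/
# Then, on the receiving side, from the directory holding the files:
#   sha256sum -c manifest.sha256
```

Because the manifest uses relative file names, the same "sha256sum -c" check works on the receiving system as long as the files and the manifest end up in one directory.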

It's worth noting that PVE is Debian based and includes dd. However, if your preference is dc3dd, dcfldd, or you're missing any other tools of choice, apt install works just fine.